Yes But ...

So essentially,

VLMs struggle to understand satire

Paper: YesBut: A High-Quality Annotated Multimodal Dataset for evaluating Satire Comprehension capability of Vision-Language Models (18 Pages)

Researchers from the Indian Institute of Technology Kharagpur, the University of Massachusetts Amherst, and Haldia Institute of Technology are interested in a model's ability to detect, understand, and comprehend satire in images.

Hmm... What’s the background?

Previous research on satire and humor in NLP and Computer Vision has primarily focused on detecting satire in text and multimodal scenarios like meme captioning.

Existing datasets, such as MemeCap and MET-Meme, do not comprehensively evaluate how well vision-language (VL) models detect, understand, and comprehend satire and humor in multimodal scenarios.

Other image datasets, like WHOOPS, comprise unconventional images that challenge commonsense expectations but are specifically designed for tasks like image captioning, image-text matching, and visual question answering.

OK, so what is proposed in the research paper?

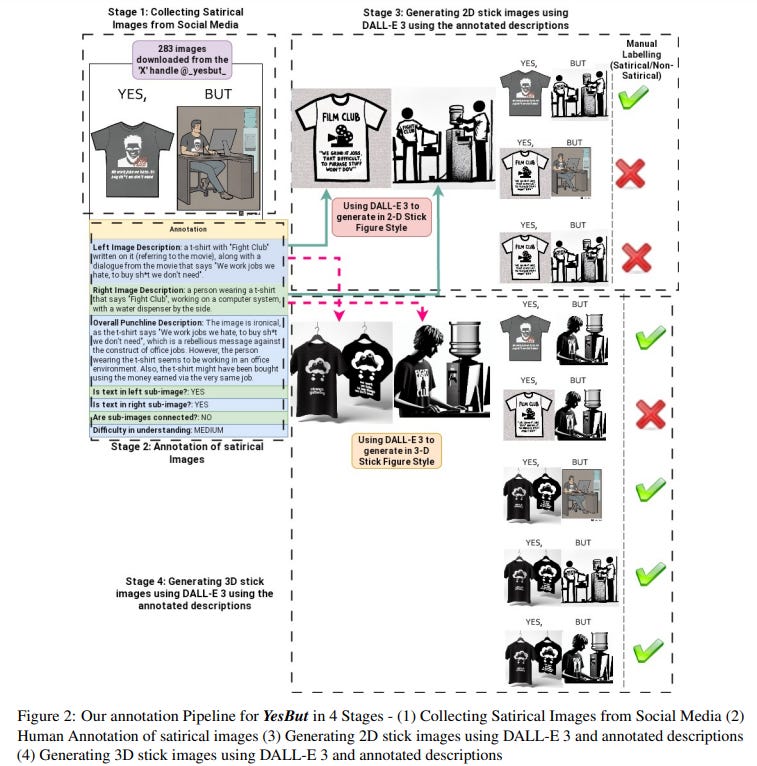

YesBut is a new dataset for evaluating the ability of Vision-Language Models (VLMs) to understand satire in images. The dataset contains satirical and non-satirical images, many of which do not contain text.

Three tasks are proposed to evaluate VLMs using the YesBut dataset: Satirical Image Detection (classifying an image as satirical or not), Satirical Image Understanding (generating descriptions of why an image is satirical), and Satirical Image Completion (selecting an image that would make a pair of images satirical).
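To make the task setup concrete, here is a minimal sketch of how the detection and completion tasks could be scored as plain accuracy. Everything in it is an assumption for illustration: the `DetectionExample` and `CompletionExample` classes, the file names, and the `predict`/`choose` callables are hypothetical stand-ins for a real VLM, and the paper's actual data format and metrics may differ. The understanding task produces free-text explanations, so it is not covered here.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class DetectionExample:
    image_path: str     # hypothetical path to an image
    is_satirical: bool  # gold label: satirical or not


@dataclass(frozen=True)
class CompletionExample:
    given_half: str            # hypothetical path to the half that is shown
    candidates: Sequence[str]  # candidate images for the missing half
    correct_index: int         # candidate that makes the pair satirical


def detection_accuracy(
    examples: Sequence[DetectionExample],
    predict: Callable[[str], bool],
) -> float:
    """Fraction of images whose predicted label matches the gold label."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(predict(ex.image_path) == ex.is_satirical for ex in examples)
    return correct / len(examples)


def completion_accuracy(
    examples: Sequence[CompletionExample],
    choose: Callable[[str, Sequence[str]], int],
) -> float:
    """Fraction of items where the chosen candidate index is the correct one."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(
        choose(ex.given_half, ex.candidates) == ex.correct_index
        for ex in examples
    )
    return correct / len(examples)


# Trivial baselines standing in for a real model (assumptions, not a VLM).
def always_satirical(image_path: str) -> bool:
    return True


def always_first(given_half: str, candidates: Sequence[str]) -> int:
    return 0
```

Swapping `always_satirical` or `always_first` for a function that actually queries a VLM would give the model's score on the same examples, and the trivial baselines show what a model has to beat.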

The authors conclude that the poor performance of the VLMs they evaluated on the YesBut tasks suggests that current VLMs struggle to understand satire in images, especially when the images do not contain text or use multiple artistic styles.

What’s next?

The researchers plan to extend their work to languages other than English.
