Optimization of Compound AI

Compound AI is better than Simple AI

Paper: LLM-based Optimization of Compound AI Systems: A Survey (14 Pages)

Researchers from Tsinghua University, Pennsylvania State University, and Xi’an Jiaotong University survey the background, key achievements, and future directions of LLM-based optimization for compound AI systems.

Hmm... What’s the background?

Compound AI systems, also referred to as Language Model Programs or LLM agents, combine multiple calls to a Large Language Model (LLM) and integrate them with other components, such as retrievers, code interpreters, and other tools.
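A toy sketch can make the idea of a compound AI system concrete. The Python below is illustrative only and is not taken from the paper: `stub_llm` is a hypothetical offline stand-in for a real model call, and `keyword_retriever`, `calculator`, and `CompoundSystem` are made-up names. The pipeline chains a retriever, two LLM calls, and a small code-interpreter-style tool. Its two instruction strings are the kind of parameters an optimizer could later tune.

```python
import ast
import operator
from dataclasses import dataclass
from typing import Callable

LLM = Callable[[str, str], str]  # hypothetical interface: (instruction, input) -> text

def keyword_retriever(query: str, corpus: list[str], k: int = 1) -> list[str]:
    """Retriever component: return the k passages sharing the most words with the query."""
    q = set(query.lower().split())
    return sorted(corpus, key=lambda doc: len(q & set(doc.lower().split())), reverse=True)[:k]

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expr: str) -> float:
    """Tool component: a tiny 'code interpreter' that safely evaluates + - * / arithmetic."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval"))

@dataclass
class CompoundSystem:
    llm: LLM
    corpus: list[str]
    # Tunable text parameters of the system.
    plan_instruction: str = "Write an arithmetic expression that answers the question."
    answer_instruction: str = "State the final answer."

    def __call__(self, question: str) -> str:
        context = keyword_retriever(question, self.corpus)                    # retrieve
        expr = self.llm(self.plan_instruction, question + "\n" + "\n".join(context))  # LLM call 1
        value = calculator(expr)                                               # run the tool
        return self.llm(self.answer_instruction, f"{question}\nResult: {value}")  # LLM call 2

def stub_llm(instruction: str, text: str) -> str:
    # Hypothetical deterministic stand-in for a model so the sketch runs offline.
    if instruction.startswith("Write an arithmetic"):
        return " + ".join(w for w in text.split() if w.isdigit())
    return text.splitlines()[-1].removeprefix("Result: ")

corpus = ["The basket holds 3 apples and 4 pears.", "The sky is blue."]
system = CompoundSystem(llm=stub_llm, corpus=corpus)
print(system("How many apples and pears are in the basket?"))  # prints 7
```

Swapping `stub_llm` for a real model client would leave the structure unchanged; only the component behind each call differs.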

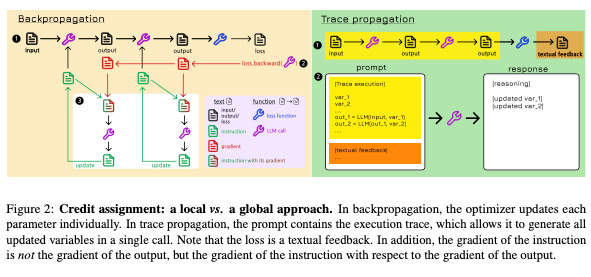

LLM-based optimization (also known as compile-time optimization) offers an efficient alternative to gradient-based and reinforcement learning techniques. It uses an LLM to optimize the parameters of a compound AI system so that they minimize empirical risk on a training dataset. Instead of tuning parameters by hand, the work shifts to prompting an LLM optimizer to propose improved parameters based on the training data.
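Here is a minimal sketch of that loop. It assumes a toy setup that is not taken from the paper. The only tunable parameter is one instruction string. The empirical risk is the average 0/1 loss over the training pairs, (1/N) Σ ℓ(f_θ(x_i), y_i). `stub_program` and `stub_optimizer` are hypothetical stand-ins for the compound system and the optimizer LLM. A real optimizer would put the current instruction and the failed examples into a prompt and return the model's suggested rewrite. No gradients are computed anywhere.

```python
from typing import Callable

Program = Callable[[str, str], str]                         # (instruction, input) -> output
Example = tuple[str, str]                                   # (input, expected output)
Optimizer = Callable[[str, list[tuple[str, str, str]]], str]

def stub_program(instruction: str, x: str) -> str:
    # Hypothetical system: the "answer" is the last word of the input,
    # but it only answers tersely when the instruction asks for one word.
    answer = x.split()[-1]
    if "one word" in instruction.lower():
        return answer
    return f"I think the answer is {answer}."

def empirical_risk(instruction: str, program: Program, train: list[Example]) -> float:
    """Average 0/1 loss of the program over the training set."""
    errors = sum(program(instruction, x) != y for x, y in train)
    return errors / len(train)

def stub_optimizer(instruction: str, failures: list[tuple[str, str, str]]) -> str:
    # Hypothetical stand-in for an LLM optimizer. A real one would format the
    # instruction and the (input, output, expected) failures into a prompt and
    # return the LLM's proposed instruction. This stub applies one fixed rule.
    if any(len(out.split()) > len(y.split()) for _, out, y in failures):
        return instruction + " Answer in one word."
    return instruction

def optimize(instruction: str, program: Program, optimizer: Optimizer,
             train: list[Example], steps: int = 3) -> tuple[str, float]:
    best, best_risk = instruction, empirical_risk(instruction, program, train)
    for _ in range(steps):
        outputs = [(x, program(best, x), y) for x, y in train]
        failures = [(x, out, y) for x, out, y in outputs if out != y]
        if not failures:
            break  # zero training error: nothing left to fix
        candidate = optimizer(best, failures)
        risk = empirical_risk(candidate, program, train)
        if risk < best_risk:  # accept a proposal only if it lowers training risk
            best, best_risk = candidate, risk
    return best, best_risk

train = [("The capital of France is Paris", "Paris"), ("Two plus two equals four", "four")]
best, risk = optimize("Answer the question.", stub_program, stub_optimizer, train)
print(best, risk)  # Answer the question. Answer in one word. 0.0
```

Accepting only candidates that lower training risk is one simple design choice. The loop never needs the system to be differentiable, only evaluable.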

OK, so what does the paper propose?

LLM-based optimization bypasses the need for computationally intensive gradient calculations and can effectively handle complex, heterogeneous parameters like instructions and tool implementations in a single call.
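The "single call" point can be sketched as a hypothetical toy, not the paper's procedure. The example has two parameter types: a natural-language instruction and the source code of a made-up tool, `to_fahrenheit`, which carries a deliberate bug. Both are serialized into one optimizer prompt, and a single JSON reply updates both. `stub_llm_reply` stands in for the optimizer LLM and returns a canned answer. `build_optimizer_prompt` and `apply_update` are also illustrative names.

```python
import json

# Hypothetical parameters of a compound system: one text prompt, one tool.
params = {
    "instruction": "Convert the user's temperature to Fahrenheit.",
    "tool_source": "def to_fahrenheit(c):\n    return c * 9 / 5\n",  # bug: missing + 32
}
feedback = "to_fahrenheit(100) returned 180.0, expected 212."

def build_optimizer_prompt(params: dict, feedback: str) -> str:
    """Pack every parameter, text and code alike, into a single optimizer request."""
    return (
        "You are optimizing a compound AI system. Reply with JSON containing "
        'the keys "instruction" and "tool_source".\n'
        f"Current parameters:\n{json.dumps(params, indent=2)}\n"
        f"Feedback:\n{feedback}\n"
    )

def stub_llm_reply(prompt: str) -> str:
    # Hypothetical offline stand-in for the optimizer LLM: it ignores the prompt
    # and returns a canned JSON update covering both parameters at once.
    return json.dumps({
        "instruction": "Convert the user's Celsius temperature to Fahrenheit.",
        "tool_source": "def to_fahrenheit(c):\n    return c * 9 / 5 + 32\n",
    })

def apply_update(params: dict, reply: str) -> dict:
    """Parse the joint update and sanity-check the new tool before accepting it."""
    update = json.loads(reply)
    namespace: dict = {}
    exec(update["tool_source"], namespace)  # WARNING: runs untrusted code; sandbox in practice
    if namespace["to_fahrenheit"](100) != 212:
        raise ValueError("proposed tool still fails the feedback example")
    return {**params, **update}

new_params = apply_update(params, stub_llm_reply(build_optimizer_prompt(params, feedback)))
print(new_params["tool_source"])
```

Checking the proposed tool against the feedback example before accepting it is one simple safeguard. Because `exec` runs arbitrary code, a real system would isolate that step.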

Compound AI systems allow detailed feedback at different stages of the reasoning process. Rather than relying solely on the final output, an optimizer can analyze and improve intermediate outputs, which gives a clearer picture of how the system reaches its decisions.
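Here is a small sketch of stage-level feedback. It assumes a hypothetical two-stage pipeline with a deliberately buggy first stage: `extract` pulls a city name out of a question, and `lookup` maps that city to a country. The final answer alone only reveals that the system was wrong. Recording a trace and checking each intermediate output shows which stage needs fixing. The stage names, checks, and helper functions are illustrative and do not come from the paper.

```python
from typing import Callable, Optional

Stage = Callable[[str], str]

def run_with_trace(stages: list[tuple[str, Stage]], x: str) -> list[tuple[str, str]]:
    """Run the stages in order and record every intermediate output."""
    trace = []
    for name, stage in stages:
        x = stage(x)
        trace.append((name, x))
    return trace

def localize_failure(trace: list[tuple[str, str]],
                     checks: dict[str, Callable[[str], bool]]) -> Optional[tuple[str, str]]:
    """Return the first (stage, output) pair that fails its check, or None."""
    for name, output in trace:
        check = checks.get(name)
        if check is not None and not check(output):
            return name, output
    return None

CITY_TO_COUNTRY = {"Paris": "France", "Rome": "Italy"}
stages = [
    ("extract", lambda q: q.split()[-1].rstrip("?")),  # buggy: takes the last word
    ("lookup", lambda city: CITY_TO_COUNTRY.get(city, "unknown")),
]
# Stage-level check on an intermediate output, not just on the final answer.
checks = {"extract": lambda city: city in CITY_TO_COUNTRY}

trace = run_with_trace(stages, "Which country is Paris in?")
print(trace)                            # [('extract', 'in'), ('lookup', 'unknown')]
print(localize_failure(trace, checks))  # ('extract', 'in')
```

An LLM optimizer given this localized signal could focus its proposal on the `extract` stage instead of rewriting the whole pipeline.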

By decomposing complex tasks into smaller, more manageable subtasks, and potentially utilizing smaller, specialized LLMs, compound AI systems offer increased transparency and control. This can mitigate some of the risks associated with unpredictable emergent behaviors often observed in large monolithic LLMs.

What’s next?

Multimodal Integration: Expanding the scope of LLM-based optimization beyond language-based systems to incorporate multimodal approaches, such as vision-language models

Finetuning Open-Source LLMs: Exploring the potential of finetuning the underlying open-source LLMs used in compound AI systems

Advanced Optimization Techniques: Investigating the incorporation of more sophisticated optimization techniques, such as architecture search and meta-optimizers, to further enhance the design and performance of both the compound AI systems and the optimization process itself
