On-the-Fly Persona Alignment with TPO

Test-time Preference Optimization leads to better persona-aligned responses

Paper: Test-Time Preference Optimization: On-the-Fly Alignment via Iterative Textual Feedback

Researchers from Shanghai AI Laboratory and The Chinese University of Hong Kong study how to align large language model outputs with human preferences at inference time.

Hmm... What’s the background?

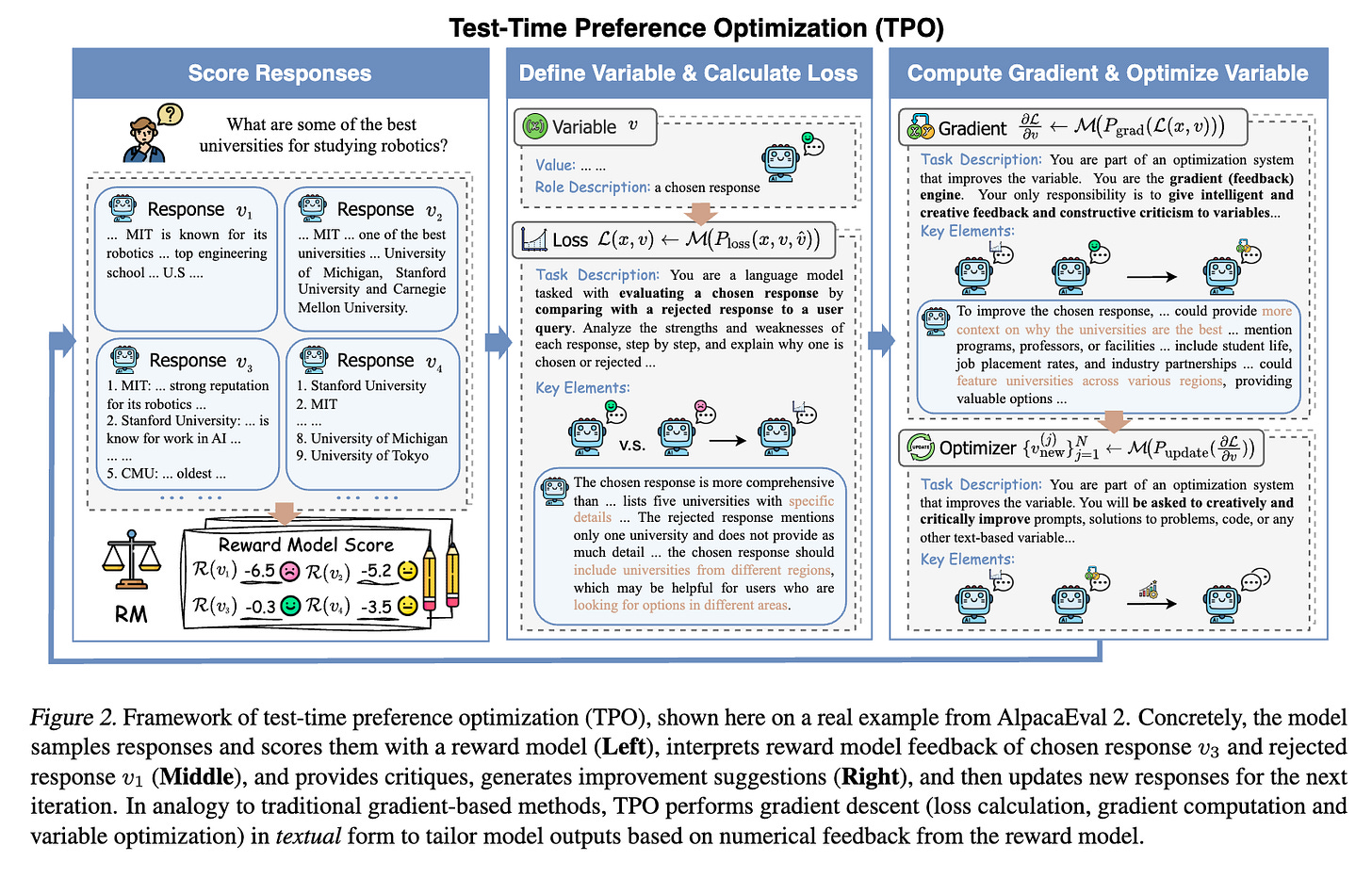

This paper develops the idea of Test-time Preference Optimization (TPO), a method that refines large language model (LLM) outputs during inference by aligning them with human preferences through iterative textual feedback, without updating the model's parameters. This contrasts with training-time preference optimization methods such as Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO), which require retraining the model with gradient-based updates to its parameters.
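To make the "no parameter updates" point concrete, here is a minimal sketch of what an iterative textual-feedback loop could look like. It is not the authors' code: the interfaces `generate`, `score`, `critique` and `revise`, and the `width`/`depth` parameters, are hypothetical stand-ins for a frozen LLM and a reward model. The toy stand-ins at the bottom exist only so the sketch runs end to end, using a made-up "pirate persona" reward.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces; in practice these would wrap a frozen LLM and a reward model.
Generate = Callable[[str, int], List[str]]           # (query, n) -> n sampled responses
Score = Callable[[str, str], float]                  # (query, response) -> scalar reward
Critique = Callable[[str, str, str], str]            # (query, best, worst) -> textual feedback
Revise = Callable[[str, str, str, int], List[str]]   # (query, best, feedback, n) -> n revisions


def tpo(query: str, generate: Generate, score: Score, critique: Critique,
        revise: Revise, width: int = 4, depth: int = 2) -> str:
    """Sketch of a test-time preference optimization loop.

    Nothing here touches model weights: only the pool of candidate
    responses and the textual feedback change from step to step.
    """
    pool: List[Tuple[float, str]] = [(score(query, r), r) for r in generate(query, width)]
    for _ in range(depth):
        pool.sort(key=lambda pair: pair[0], reverse=True)
        best, worst = pool[0][1], pool[-1][1]
        # Turn the reward gap between best and worst into natural-language feedback
        feedback = critique(query, best, worst)
        # The same frozen model rewrites the best response following the feedback
        pool += [(score(query, r), r) for r in revise(query, best, feedback, width)]
    return max(pool, key=lambda pair: pair[0])[1]


# Toy stand-ins so the sketch runs end to end (not real models).
def toy_generate(query: str, n: int) -> List[str]:
    return [f"draft {i}" for i in range(n)]


def toy_score(query: str, response: str) -> float:
    # Toy "persona" reward: more pirate talk scores higher
    return response.count("arr") + 0.01 * len(response)


def toy_critique(query: str, best: str, worst: str) -> str:
    return "Stay in the pirate persona: add more 'arr'."


def toy_revise(query: str, best: str, feedback: str, n: int) -> List[str]:
    # Toy rewrite: append increasing amounts of persona flavor to the best response
    return [best + " arr" * (i + 1) for i in range(n)]


answer = tpo("Greet me as a pirate", toy_generate, toy_score, toy_critique, toy_revise)
print(answer)
```

The design choice worth noticing is that the only state carried between steps is text (candidate responses and feedback), which is what distinguishes this style of alignment from RLHF or DPO, where the preference signal is turned into gradients on the weights.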

So what is proposed in the research paper?

Here are the main insights:

TPO leverages the LLM’s inherent ability to interpret and act on reward signals: numerical reward-model scores are translated into textual critiques and suggested improvements.

TPO can enable unaligned models to match or surpass the performance of their aligned counterparts after just a few optimization steps.

TPO improves inference stability, making models less prone to generating unexpected or unsafe responses.
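The first insight, turning reward scores into text the model can act on, can be illustrated with a small prompt builder. This is a hedged sketch: the function name `build_critique_prompt` and the prompt wording are hypothetical, and the only idea taken from the text is that a scalar reward signal is re-expressed as a textual comparison the LLM can critique. Here the highest- and lowest-scoring candidates become a "chosen" and "rejected" pair.

```python
from typing import List, Tuple


def build_critique_prompt(query: str, scored: List[Tuple[str, float]]) -> str:
    """Hypothetical helper: express reward-model scores as a textual comparison."""
    # Rank candidates by reward; the top and bottom become the chosen/rejected pair
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    (chosen, best_score), (rejected, worst_score) = ranked[0], ranked[-1]
    return (
        f"Query: {query}\n"
        f"Chosen response (reward {best_score:.2f}):\n{chosen}\n"
        f"Rejected response (reward {worst_score:.2f}):\n{rejected}\n"
        "Explain why the chosen response is better and list concrete "
        "suggestions to improve it further."
    )


prompt = build_critique_prompt(
    "Greet me as a pirate",
    [("Hello.", 0.2), ("Ahoy, matey!", 0.9), ("Hi", 0.1)],
)
print(prompt)
```

The resulting critique would then be fed back to the same model as guidance for its next revision, so the reward model never needs to produce anything other than scores.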

What’s next?

Looking ahead, research in this area could refine textual interaction protocols for specific tasks and explore more advanced reward models. While TPO demonstrates test-time scaling with respect to both the number of sampled responses and the number of optimization steps, further research could investigate other ways of scaling TPO.
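The two scaling axes trade off against inference cost. As a rough, hypothetical cost model (an assumption, not a figure from the paper), suppose a search samples `width` responses up front, produces `width` revisions at each of `depth` optimization steps, scores every response once, and generates one textual critique per step. The call counts then grow linearly in each axis:

```python
def tpo_budget(width: int, depth: int) -> dict:
    """Count model calls under a hypothetical TPO-style cost model."""
    responses = width * (depth + 1)  # initial samples + `width` revisions per step
    return {
        "llm_responses": responses,
        "reward_evaluations": responses,  # every response is scored once
        "critiques": depth,               # one textual critique per optimization step
    }


for w, d in [(1, 0), (5, 2), (20, 2), (5, 10)]:
    print(f"width={w}, depth={d}: {tpo_budget(w, d)}")
```

Under this model, widening the search and deepening it cost about the same per added response, but only depth adds rounds of textual feedback.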

