No Q* yet but Small Models have R* 🌟

So essentially,

Small Language Models are have better complex reasoning without fine tuning or prompting using rStar!

Paper: Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers (17 Pages)

Researchers from Microsoft Asia and Harvard are introducing upgrading complex reasoning in Small Language Models.

Hmm..What’s the background?

SLMs often struggle to effectively explore the range of possible solutions during reasoning. They may get stuck in a loop of considering low-quality reasoning steps, even after multiple attempts. Even when SLMs manage to explore high-quality reasoning steps, they struggle to figure out which steps are superior or correct of final answers, making it difficult to guide the self-exploration process.

Ok, So what is proposed in the research paper?

The researchers propose a novel approach called rStar, or Self-play muTuAl Reasoning, to enhance the reasoning abilities of smaller language models (SLMs). RStar aims to overcome the limitations of SLMs in complex reasoning tasks by focusing on two key aspects:

Enhancing Solution Space Exploration through Diverse Reasoning Actions: Recognizing that relying on a single type of reasoning action can limit the exploration of potential solutions, rStar introduces a richer set of five human-like reasoning actions. These actions enable the SLM to:

Propose single-step thoughts

Propose the remaining thought steps

Propose the next sub-question along with its answer

Re-answer a sub-question using few-shot Chain-of-Thought (CoT) prompting

Rephrase the question or sub-question for clarity

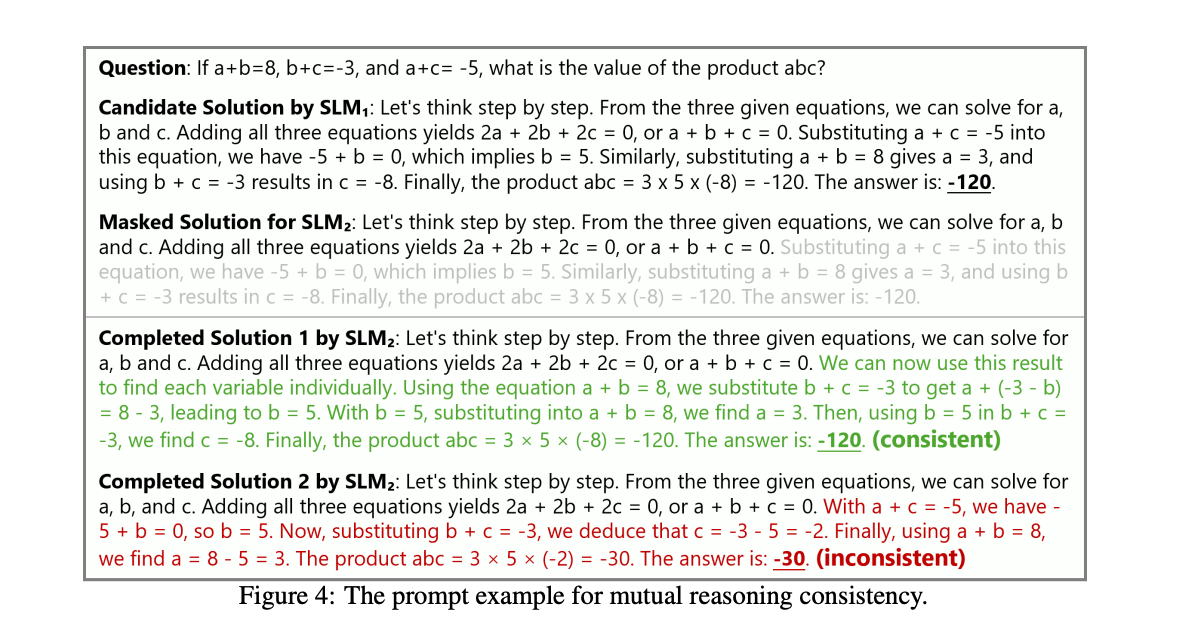

Improving Reasoning Trajectory Selection with Mutual Consistency: To address the challenge of evaluating reasoning steps and selecting the most promising solution path, rStar incorporates a unique "mutual consistency" mechanism. This involves using a second SLM as a discriminator to provide unsupervised feedback on the candidate reasoning trajectories generated by the first SLM

SLMs, such as LLaMA2-7B, already exhibit strong reasoning capabilities prior to domain specialized supervised fine-tuning. rStar achieves state-of-the-art performance across five SLMs and five diverse reasoning tasks, substantially outperforming existing multi-round prompting and self-improvement approaches.

What’s next?

Future work on rStar involves significant inference costs due to multiple rollouts and model calls, especially for larger datasets. This suggests that one area for future exploration could be optimizing rStar's efficiency by reducing its computational cost.

So essentially,

Small Language Models are getting better at complex reasoning without fine tuning or prompting using rStar

Learned something new? Consider sharing with your friends!