LongRAG with Long Retriever and Long Reader

So essentially,

LongRAG addresses the limitations of traditional RAG by retrieving long units (whole documents or groups of related documents) and handing them to a long-context LLM.

Paper: LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs (13 Pages)

Project page: https://tiger-ai-lab.github.io/LongRAG/

Researchers from the University of Waterloo want to make RAG better. Retrieval-Augmented Generation (RAG) methods enhance large language models (LLMs) by using a separate retrieval component to pull information from an external corpus. This paper introduces a pairing of a long retriever and a long reader that outperforms traditional RAG setups by processing entire Wikipedia documents, or groups of related documents, as single retrieval units.

Hmm.. What’s the background?

Traditional retrieval-augmented generation (RAG) methods typically use short retrieval units, such as 100-word passages. That leaves the retriever searching a very large corpus of small fragments, which can lead to sub-optimal performance. Previous research has focused on enhancing different aspects of RAG, such as improving the retriever, enhancing the reader, or jointly fine-tuning both components. However, the impact of using long-context LLMs and long retrieval units in RAG remained underexplored.

Ok, so what is proposed in the research paper?

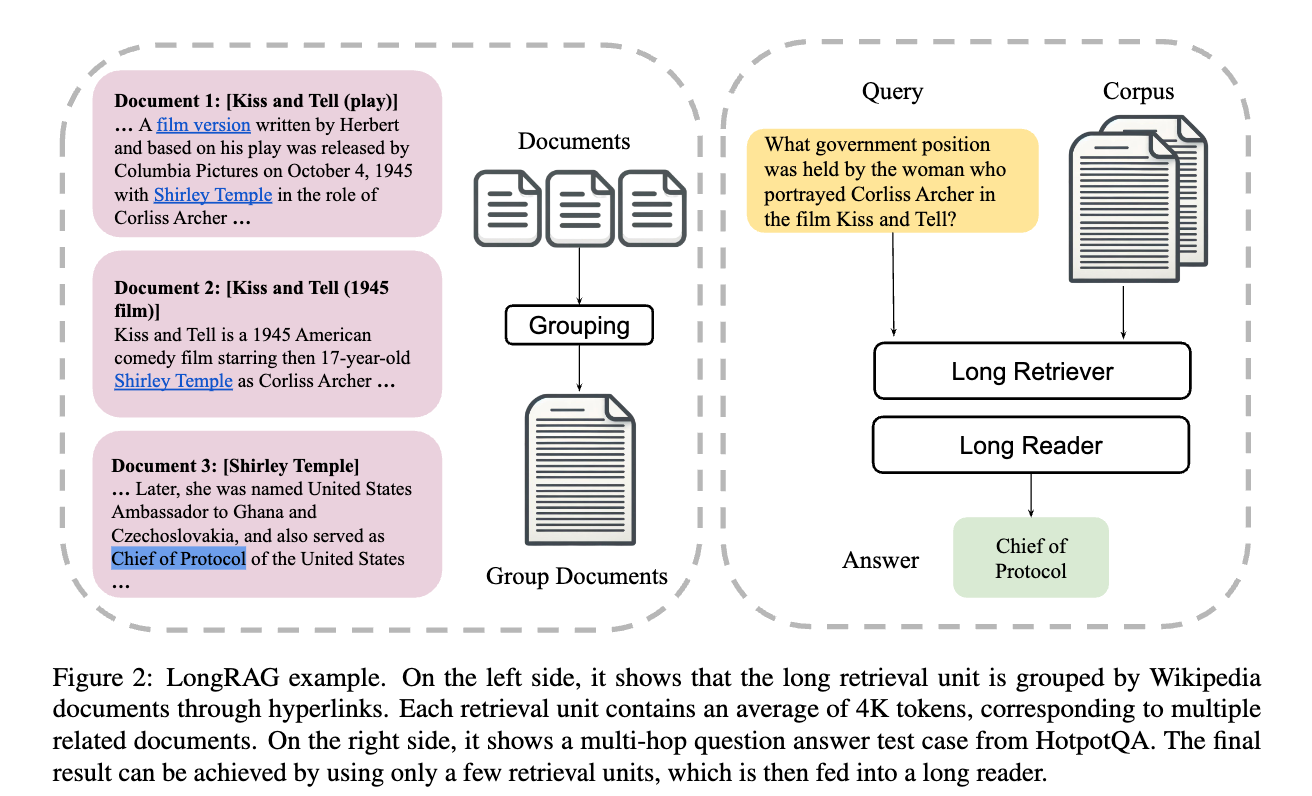

The LongRAG framework consists of three key designs that aim to improve the efficiency and effectiveness of retrieval-augmented generation for open-domain question answering tasks:

Long Retrieval Unit: Instead of using short text passages as retrieval units, LongRAG uses entire Wikipedia documents or groups of related documents to construct long retrieval units of more than 4,000 tokens. This reduces the corpus size, improves information completeness, and makes retrieval easier.

Long Retriever: The long retriever identifies coarsely relevant information for a given query by searching through all long retrieval units in the corpus. The top four to eight retrieval units are concatenated to form a long context.

Long Reader: The long reader, typically a long-context LLM like Gemini or GPT-4, receives the concatenated retrievals (around 30,000 tokens) and the question as input. It then reasons over the long context and generates the final answer.
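
To make the first two designs a bit more concrete, here is a minimal sketch of grouping related documents into long units and then retrieving the top few. It is not the paper's implementation. The names `build_long_units`, `retrieve_long_context`, `unit_budget`, and the `related` mapping are all hypothetical, whitespace splitting stands in for a real tokenizer, and a toy word-overlap score stands in for a real dense retriever.

```python
from collections import Counter


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words stand in for real tokens.
    return len(text.split())


def build_long_units(docs: dict[str, str], related: dict[str, list[str]],
                     unit_budget: int) -> list[str]:
    """Greedily merge each document with its related documents while the
    merged unit stays within unit_budget tokens (illustrative only)."""
    units, used = [], set()
    for doc_id, text in docs.items():
        if doc_id in used:
            continue
        used.add(doc_id)
        parts, size = [text], count_tokens(text)
        for other in related.get(doc_id, []):
            if other in used or other not in docs:
                continue
            extra = count_tokens(docs[other])
            if size + extra > unit_budget:
                continue
            parts.append(docs[other])
            size += extra
            used.add(other)
        units.append("\n\n".join(parts))
    return units


def overlap_score(query: str, unit: str) -> int:
    # Toy relevance score: how many query words also occur in the unit.
    q, u = Counter(query.lower().split()), Counter(unit.lower().split())
    return sum(min(q[w], u[w]) for w in q)


def retrieve_long_context(query: str, units: list[str], top_k: int = 4) -> str:
    """Rank every unit, keep the top_k, and concatenate them into one context."""
    ranked = sorted(units, key=lambda u: overlap_score(query, u), reverse=True)
    return "\n\n".join(ranked[:top_k])


docs = {
    "a": "Waterloo is a city in Ontario",
    "b": "The University of Waterloo is in Waterloo Ontario",
    "c": "Gemini is a long-context language model",
}
related = {"a": ["b"], "b": ["a"]}
units = build_long_units(docs, related, unit_budget=50)
context = retrieve_long_context("Where is the University of Waterloo", units, top_k=1)
```

Because related documents travel together, the corpus ends up with fewer, larger units, and the retriever only has to get roughly the right neighborhood rather than the exact passage.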

For short contexts (less than 1,000 tokens), the reader extracts the answer directly from the retrieved context. For long contexts (over 4,000 tokens), LongRAG uses a two-turn approach. First, it generates a long answer based on the context and question. Then, it extracts a concise short answer from the long answer.
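
The short-versus-long branching can be sketched as below. This is an assumption-heavy illustration: `long_reader` and `short_limit` are hypothetical names, `llm` is any callable that maps a prompt string to a completion string (not a real API), the prompts are made up, and every context at or above `short_limit` is treated as "long", even though the text leaves the range between 1,000 and 4,000 tokens unspecified.

```python
from typing import Callable


def long_reader(question: str, context: str, llm: Callable[[str], str],
                short_limit: int = 1000) -> str:
    """Answer directly for short contexts; use two turns for long ones."""
    n_tokens = len(context.split())  # assumption: whitespace token count
    if n_tokens < short_limit:
        # Single turn: extract the short answer straight from the context.
        return llm(f"Context:\n{context}\n\nQuestion: {question}\n"
                   "Extract the answer in a few words.")
    # Turn 1: produce a longer, free-form answer grounded in the context.
    long_answer = llm(f"Context:\n{context}\n\nQuestion: {question}\n"
                      "Answer in a few sentences.")
    # Turn 2: reduce the long answer to a concise short answer.
    return llm(f"Question: {question}\nLong answer: {long_answer}\n"
               "Extract the final answer in a few words.")
```

Note that the second turn only sees the question and the long answer, not the full retrieved context, so the extraction step works on a much smaller input.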

What’s next?

Current limitations in long embedding models necessitate the use of approximation methods to encode long retrieval units. Advancements in this area would improve performance and potentially reduce memory consumption. Investigating different prompt engineering techniques for the long reader could further optimize LongRAG's answer extraction process. Evaluating LongRAG on a wider range of knowledge-intensive NLP tasks, beyond open-domain question answering, would give a more comprehensive picture of its capabilities and limitations.
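
One way to picture such an approximation is to score a long unit by its best-matching chunk instead of embedding the whole unit at once. The sketch below is illustrative only: the bag-of-words `embed` is a stand-in for a real embedding model, and `unit_score`, `split_chunks`, and the `chunk_size` default are hypothetical choices, not values taken from the source.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a dense encoder: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def split_chunks(text: str, chunk_size: int) -> list[str]:
    # Cut the unit into consecutive chunks of at most chunk_size words.
    words = text.split()
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, len(words), chunk_size)]


def unit_score(query: str, unit: str, chunk_size: int = 512) -> float:
    """Approximate sim(query, unit) by the best-scoring chunk inside the unit."""
    q = embed(query)
    return max((cosine(q, embed(c)) for c in split_chunks(unit, chunk_size)),
               default=0.0)
```

The trade-off is that every unit needs several chunk embeddings instead of one, which is where the memory cost comes from. A strong long-context embedding model could, in principle, replace the chunk loop with a single vector per unit.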
