Depth in Apple Photos

We can get depth maps from normal photos in less than a second

Paper: Depth Pro: Sharp Monocular Metric Depth in Less Than a Second (33 Pages)

Github: https://github.com/apple/ml-depth-pro

Researchers at Apple set out to produce high-resolution (2.25-megapixel) metric depth maps from ordinary single photos.

Hmm... What’s the background?

Zero-shot monocular depth estimation (predicting depth from a single image, on images from domains not seen during training) is becoming increasingly important for applications like image editing, view synthesis, and image generation. However, existing methods fall short in different ways: some struggle to produce metric depth maps (with absolute scale) in a zero-shot setting, others lack the resolution and fine-grained detail needed for realistic results, and some are computationally intensive, with high latency.
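
To see what "absolute scale" buys you, consider a relative depth model: its output is only correct up to an unknown scale and shift, so its values must be aligned to ground truth (for example with a least-squares fit) before they can be read in metres. A metric model such as Depth Pro aims to make that step unnecessary. The sketch below assumes NumPy and fits directly in depth space (some methods fit in inverse-depth space instead); `align_scale_shift` is a hypothetical helper, not part of any library.

```python
import numpy as np


def align_scale_shift(relative: np.ndarray, metric_gt: np.ndarray) -> tuple[float, float]:
    """Hypothetical helper: least-squares s, t minimising ||s * relative + t - metric_gt||^2."""
    r = relative.ravel()
    g = metric_gt.ravel()
    # Design matrix with one column for the scale and one for the shift
    A = np.stack([r, np.ones_like(r)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, g, rcond=None)
    return float(s), float(t)


# Toy data: true depth in metres, and a "relative" map that hides the scale and shift
true_depth = np.array([[1.0, 2.0], [3.0, 4.0]])
relative = (true_depth - 0.5) / 2.0
s, t = align_scale_shift(relative, true_depth)
print(f"s = {s:.3f}, t = {t:.3f}")  # s = 2.000, t = 0.500
```

On this toy data the fit recovers the hidden scale and shift exactly, because the relative map was built as an affine transform of the true depth. On a genuinely new image there is no ground truth to fit against, which is why a relative model alone cannot deliver metric depth.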

OK, so what is proposed in the research paper?

The paper presents Depth Pro, a foundation model for zero-shot metric monocular depth estimation:

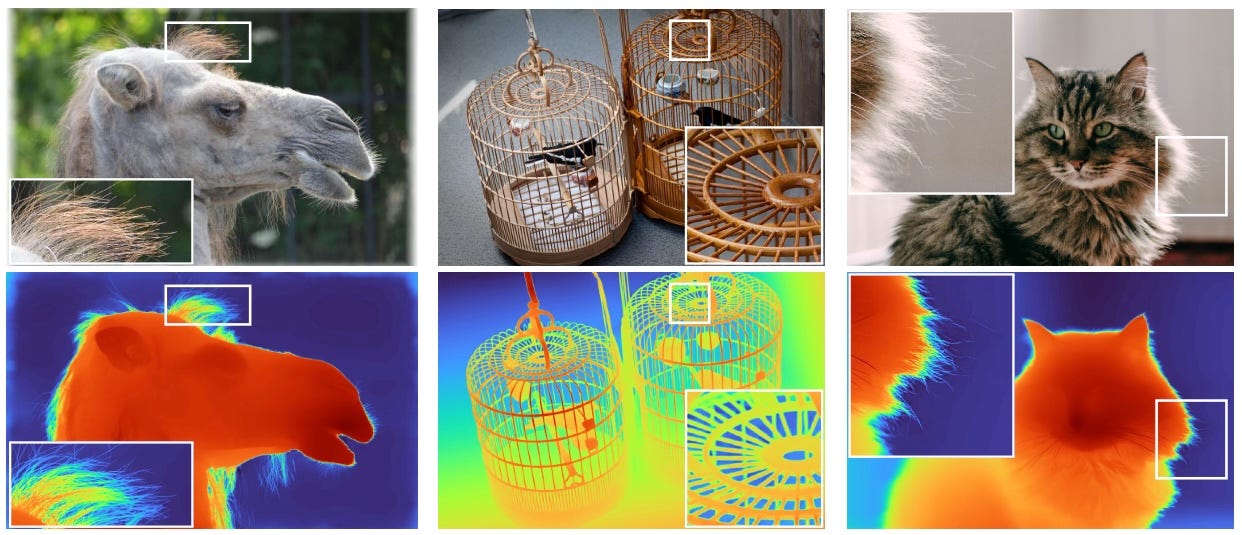

Depth Pro generates high-resolution (2.25-megapixel) metric depth maps, with absolute scale, in just 0.3 seconds on a V100 GPU.

It excels at boundary tracing, surpassing previous methods in both boundary accuracy and speed, with significantly higher boundary recall than other leading models across several datasets.
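
Boundary recall can be illustrated with a simplified, hypothetical definition: treat a pixel as lying on an occlusion boundary when its depth differs from a neighbour's by more than a fixed ratio, then measure what fraction of the ground-truth boundary pixels the prediction also flags. This is a sketch in the spirit of depth-based boundary metrics, not the paper's exact formulation; the 1.05 threshold and the function names are assumptions, and depths must be positive.

```python
import numpy as np


def depth_edges(depth: np.ndarray, ratio: float = 1.05) -> np.ndarray:
    """Hypothetical boundary definition: flag a pixel when its depth differs from
    its right or lower neighbour by more than `ratio` (depths must be positive)."""
    edges = np.zeros(depth.shape, dtype=bool)
    # Horizontal neighbour pairs: column j vs column j + 1
    left, right = depth[:, :-1], depth[:, 1:]
    edges[:, :-1] |= np.maximum(left, right) / np.minimum(left, right) > ratio
    # Vertical neighbour pairs: row i vs row i + 1
    top, bottom = depth[:-1, :], depth[1:, :]
    edges[:-1, :] |= np.maximum(top, bottom) / np.minimum(top, bottom) > ratio
    return edges


def boundary_recall(pred_depth: np.ndarray, gt_depth: np.ndarray, ratio: float = 1.05) -> float:
    """Fraction of ground-truth boundary pixels that the prediction also flags."""
    gt = depth_edges(gt_depth, ratio)
    pred = depth_edges(pred_depth, ratio)
    if not gt.any():
        return 1.0  # no boundaries to recall
    return float((gt & pred).sum() / gt.sum())


# Ground truth: an object 1 m away (left half) in front of a wall 3 m away (right half)
gt = np.array([[1.0, 1.0, 3.0, 3.0]] * 4)
# A prediction that gets the top two rows right but misses the object in the bottom two
pred = gt.copy()
pred[2:, :] = 3.0
print(boundary_recall(gt, gt))    # 1.0
print(boundary_recall(pred, gt))  # 0.5
```

Recall only rewards finding true boundaries; a blurry prediction that flags extra pixels around an edge is penalised by precision instead, which is why boundary metrics are usually reported together.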

Depth Pro also accurately estimates focal length from a single image, without relying on metadata such as EXIF camera intrinsics.
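
Why does focal length matter for metric depth? Under an ideal pinhole camera (an assumption here; real lenses add distortion), the focal length in pixels links the field of view to the image width and determines how an image position turns into a metric offset at a known depth. With the hypothetical helpers below, an exact depth combined with a focal length that is 2x too large still halves every lateral distance.

```python
import math


def focal_px_from_hfov(width_px: int, hfov_deg: float) -> float:
    """Hypothetical helper. Ideal pinhole model: f = (W / 2) / tan(HFOV / 2), in pixels."""
    return (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def lateral_offset_m(u_px: float, cx_px: float, depth_m: float, f_px: float) -> float:
    """Hypothetical helper. Metric horizontal offset from the optical axis: X = (u - cx) * Z / f."""
    return (u_px - cx_px) * depth_m / f_px


# A 1000-pixel-wide image with a 90 degree horizontal field of view
f = focal_px_from_hfov(1000, 90.0)  # about 500 px
# A point at the right image edge (u = 1000, centre cx = 500) at an exact depth of 2 m
x_true = lateral_offset_m(1000, 500, 2.0, f)       # about 2.0 m
x_wrong = lateral_offset_m(1000, 500, 2.0, 2 * f)  # about 1.0 m: focal length overestimated 2x
print(f"f = {f:.1f} px, X = {x_true:.2f} m (correct f), {x_wrong:.2f} m (2x f)")
```

So a depth map can be metrically correct along the viewing ray and still produce a distorted 3D scene if the focal length is wrong, which is why estimating it matters when metadata is missing.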

It achieves state-of-the-art zero-shot metric depth estimation accuracy without requiring camera intrinsics as input. This makes it highly versatile for "in the wild" applications such as novel view synthesis, where intrinsics are often unavailable.
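
As a rough illustration of how metric depth plus a focal length enables novel view synthesis, the sketch below back-projects every pixel to 3D and projects it into a camera moved sideways by `tx_m` metres. It assumes a pinhole camera with the principal point at the image centre and only computes where each pixel lands (forward warping); a real view-synthesis pipeline also has to splat pixels, resolve occlusions, and fill holes. The function `reproject` is hypothetical.

```python
import numpy as np


def reproject(depth: np.ndarray, f_px: float, tx_m: float) -> tuple[np.ndarray, np.ndarray]:
    """Hypothetical helper: where each pixel lands after moving the camera tx_m metres
    along +x. Assumes an ideal pinhole camera with the principal point at the centre."""
    h, w = depth.shape
    cx, cy = (w - 1) / 2, (h - 1) / 2
    v, u = np.mgrid[0:h, 0:w].astype(float)
    # Back-project every pixel to a 3D point (metres) in the original camera frame
    X = (u - cx) * depth / f_px
    Y = (v - cy) * depth / f_px
    Z = depth
    # Moving the camera by +tx along x is equivalent to shifting the points by -tx
    X_new = X - tx_m
    # Project the shifted points onto the new camera's image plane
    u_new = f_px * X_new / Z + cx
    v_new = f_px * Y / Z + cy
    return u_new, v_new


# One row, two pixels: a near point at 1 m and a far point at 4 m
depth = np.array([[1.0, 4.0]])
u_new, v_new = reproject(depth, f_px=500.0, tx_m=0.1)
shift = u_new - np.array([[0.0, 1.0]])
print(np.round(shift, 3))  # near pixel moves about -50 px, far pixel about -12.5 px
```

Nearby points shift more than distant ones (the shift is f * tx / Z), which is the parallax that makes the new view look three-dimensional. With only relative depth or a wrong focal length, that parallax would be mis-scaled.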

What’s next?

The authors acknowledge limitations with translucent surfaces and volumetric scattering, where a single depth value per pixel is ill-defined. This points to an area for future research and improvement.

So essentially,

We can get metric depth maps from normal photos in less than a second.

Learned something new? Consider sharing it!