Democratizing Medical LLMs

Apollo-MoE is a medical LLM available in 50 languages

Paper: Efficiently Democratizing Medical LLMs for 50 Languages via a Mixture of Language Family Experts (25 Pages)

Github: https://github.com/FreedomIntelligence/ApolloMoE

Researchers from The Chinese University of Hong Kong, Shenzhen present the development and evaluation of Apollo-MoE, a family of multilingual medical large language models (LLMs).

Hmm... What’s the background?

The goal of these models is to make medical knowledge accessible to a wider range of people by supporting many different languages.

One major challenge for developing multilingual LLMs is the scarcity of medical data in languages other than English. Existing multilingual medical models typically focus on a small number of languages.

OK, so what is proposed in the research paper?

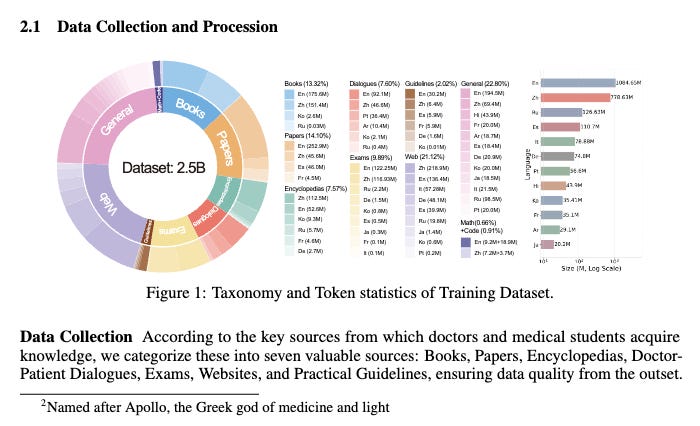

To address this, the authors first constructed a high-quality medical dataset in 12 major languages: English, Chinese, German, Portuguese, Spanish, French, Russian, Hindi, Italian, Korean, Japanese, and Arabic.

They assembled the dataset from diverse sources such as books, papers, encyclopedias, doctor-patient dialogues, exams, websites, and practical guidelines, then performed rigorous quality checks and ablation studies to verify its quality.

The Apollo-MoE models, which utilize language family experts, achieved state-of-the-art performance on benchmarks in both major and minor languages, outperforming existing open-source models with similar or even larger parameter sizes.
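To make the idea of language family experts more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the family grouping in `LANGUAGE_FAMILY`, the `route` helper, and the top-k softmax routing are all assumptions chosen for illustration, and the paper's actual grouping and router may differ. The sketch only shows why sharing one expert per family needs fewer experts than one per language.

```python
import math

# Hypothetical grouping of the 12 major languages into families.
# This mapping is an illustrative assumption, not the paper's assignment.
LANGUAGE_FAMILY = {
    "English": "Germanic", "German": "Germanic",
    "Spanish": "Romance", "French": "Romance",
    "Portuguese": "Romance", "Italian": "Romance",
    "Russian": "Slavic", "Hindi": "Indo-Aryan",
    "Chinese": "Sino-Tibetan", "Korean": "Koreanic",
    "Japanese": "Japonic", "Arabic": "Semitic",
}

# One expert per family instead of one expert per language.
FAMILIES = sorted(set(LANGUAGE_FAMILY.values()))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, top_k=1):
    """Pick the top_k family experts for one token.

    router_scores holds one raw score per entry in FAMILIES.
    Returns (family, weight) pairs whose weights sum to 1.
    """
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(FAMILIES[i], probs[i] / norm) for i in chosen]


if __name__ == "__main__":
    print(len(LANGUAGE_FAMILY), "languages share", len(FAMILIES), "experts")
    # Made-up router scores that favor the expert at index 4.
    scores = [0.0] * len(FAMILIES)
    scores[4] = 5.0
    print(route(scores, top_k=2))
```

In this toy setup, a new language from an already covered family would reuse an existing expert rather than add a new one, which is the efficiency argument behind grouping by family.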

What’s next?

The current study focused on models up to 7B parameters. For larger models, further research is needed to determine the ideal number of layers to expand for optimal performance.
